Check out my writing and presentations about civic tech, digital transformation, disaster management and more.

-

Making the Libertarian Party Viable in New York City

-

Taiwan’s Radical Participatory Democracy Training is Coming to New York

-

“The Open Aid Movement” Presented at NVOAD Conference May 9th, 2018

-

For Government, It’s DSO or Die

-

Debrief: My 2017 Campaign for NYC Public Advocate

-

A More Transparent City, with a Page for Every Capital Project

-

“Big City” Libertarianism

-

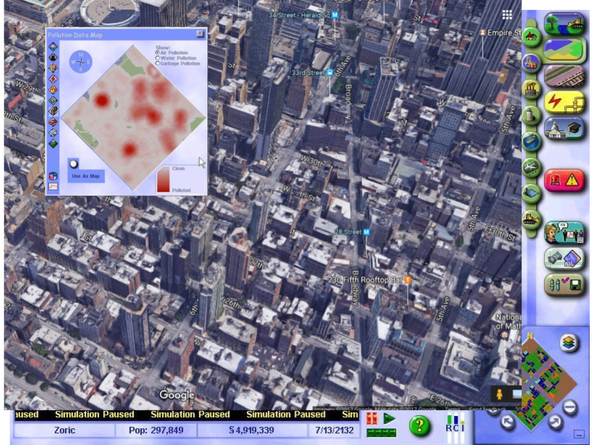

Imagining SimNYCity

-

It’s Time for a “Participatory” Democracy Instead of our “Consumer” One